“Perfection is achieved, not when there is nothing more to add, but when there is nothing left to take away.”

— Antoine de Saint-Exupéry

Beyond Healing: The Cycle of Rest, Repair, and Growth

Healing is sometimes seen as a permanent state, something we enter and never leave. But what if healing is just one phase of a deeper rhythm? This post explores personal transformation as a cycle of rest, repair, and growth. It offers a clear, grounded perspective for those ready to stop circling their pain and start reclaiming their lives.

The Difference Between Individual Performance Management and OKRs: Why Both Matter

Performance management is about individual growth and accountability. OKRs are about collective ambition and alignment. Both matter, but they serve different purposes. What happens when we blur the line between the two? This post unpacks the consequences and offers a clearer path forward.

The Five Elements of Confidence in Decision Making

What makes a decision feel solid and clear enough to act on, even under uncertainty? This post breaks down five key elements that build real confidence in decision-making, including clarity, input, and accountability. It’s a practical approach for anyone navigating complex decisions.

Measuring the Impact of AI on Operations

Many leaders ask, "How does AI fit into my business?" This post reframes the question: AI isn't just an add-on; it's a catalyst for accelerating outcomes. Using the "Focus Factor," you'll see where AI automates routine tasks and unblocks creative capacity. The result? More time for innovation, higher-quality work, and a team freed up to build what really matters.

Curious where AI could unlock hidden capacity in your organization?

Hitting the Wall

This post examines what happens when growth outpaces infrastructure, drawing on insights from a paper co-authored with Scrum co-creator Jeff Sutherland. When a team hits a wall because of broken CI/CD and mounting tech debt, the solution isn't to slow down; it's to form a dedicated Ops Scrum that fixes the foundation while delivery continues.

Discover the key to scaling without compromising speed.

Feature Velocity and Forecasting

Teams often ask: “When will this be done?” This post reframes that question around feature velocity: the amount of explicit value a team consistently delivers per sprint. By tracking velocity over time and spotting trends, you can forecast future deliverables more reliably. The payoff? Better planning, fewer surprises, and a team that keeps its promises instead of chasing deadlines.

Curious how steady velocity could make your roadmaps rock-solid?
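As a rough illustration of the forecasting idea, here is a minimal sketch that assumes velocity is recorded as one number per sprint (story points or completed features; the unit is an assumption, not something the post specifies) and divides the remaining work by recent velocity. The helper name `forecast_sprints` and the three-sprint window are hypothetical choices for illustration. Dividing by the best, average, and worst recent sprints yields a range rather than a single date.

```python
import math
from statistics import mean


def forecast_sprints(velocities, remaining, window=3):
    """Forecast sprints left as (optimistic, expected, pessimistic).

    Hypothetical helper: `velocities` holds one number per completed sprint,
    `remaining` is the outstanding work in the same (assumed) unit.
    """
    recent = velocities[-window:]  # only the most recent sprints reflect current pace
    if not recent or min(recent) <= 0:
        raise ValueError("need recent sprints with positive velocity")
    best, avg, worst = max(recent), mean(recent), min(recent)
    # Round up: a partly used sprint still occupies a whole sprint on the calendar.
    return tuple(math.ceil(remaining / v) for v in (best, avg, worst))


print(forecast_sprints([18, 22, 20, 21, 19], remaining=100))  # → (5, 5, 6)
```

With recent velocities of 20, 21, and 19 and 100 units of work remaining, the sketch forecasts 5 sprints at the best or average pace and 6 at the slowest pace.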

Why Normalizing Velocities Doesn't Matter

Ever assumed your team’s sprint velocity is set in stone? This post challenges that assumption, showing why treating it as a fixed number can mask shifts in efficiency, scope, or team health. Instead, you’ll learn to read velocity trends as signals, so you can spot process issues early and adapt before they derail your predictability.

Curious how tuning your velocity lens could surface hidden insights in your delivery rhythm?

Why We Measure

When we measure, we aren't just collecting numbers; we're seeking clarity. This post examines why measurement isn't about checking boxes but about illuminating what truly matters: performance, progress, and informed decisions. Whether you're tracking team health, outcomes, or process quality, the value lies in turning data into insight.

So, the real question is: are you measuring to understand, or to report?

Minimally Marketable Feature

The idea in this post is simple: don’t wait for perfection. Focus on the smallest slice of functionality that delivers real value to users. By identifying and building a Minimally Marketable Feature (MMF), you get early user feedback, guide smart iteration, and avoid bloated delivery cycles.

Curious how pinning down that minimal slice could transform your release strategy?

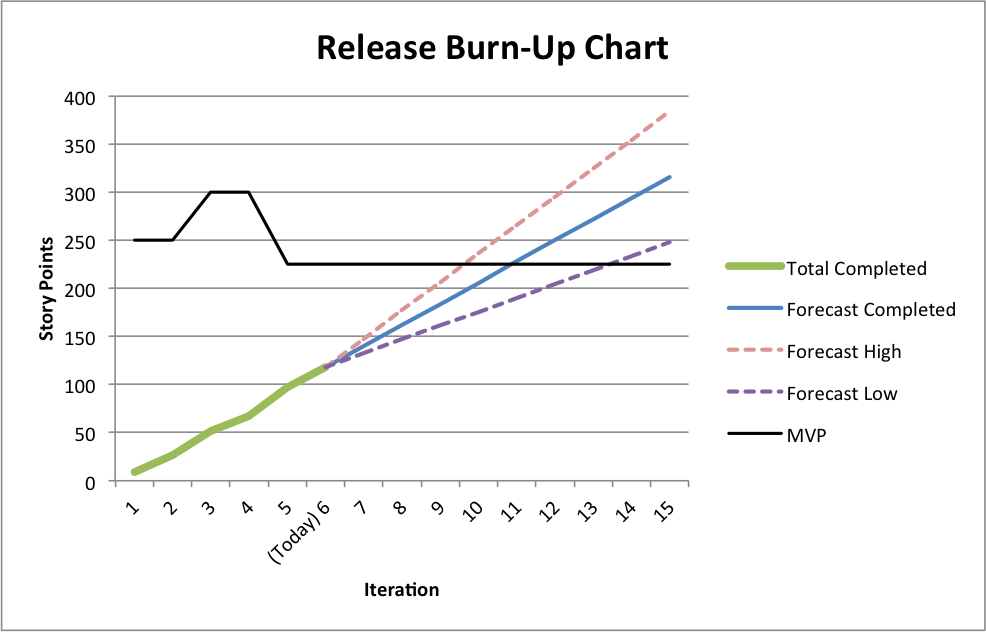

How to Create a Release Burn-Up Chart

Ever wish you could see progress and scope changes at a glance? This post guides you through creating a release burn-up chart, a visual representation that tracks completed work against the total scope. It's great for spotting scope creep, celebrating milestones, and keeping everyone aligned on real progress.

Curious how adding one clear chart can boost transparency and team confidence?
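The data behind a burn-up chart comes down to two lines: cumulative completed work and total scope, one point per sprint. Here is a minimal sketch, assuming both are tracked as numbers per sprint in the same unit; the helper names `burn_up_series` and `scope_changes` are hypothetical. A step in the scope line is where scope creep shows up.

```python
from itertools import accumulate


def burn_up_series(completed_per_sprint, scope_per_sprint):
    """Return (cumulative_done, total_scope) with one point per sprint.

    Hypothetical helper: both inputs are per-sprint numbers in the same unit.
    """
    if len(completed_per_sprint) != len(scope_per_sprint):
        raise ValueError("need one completed value and one scope value per sprint")
    done = list(accumulate(completed_per_sprint))  # the "burn-up" line only ever rises
    return done, list(scope_per_sprint)


def scope_changes(scope):
    """List (sprint_number, delta) for every sprint where total scope moved."""
    return [
        (i + 1, scope[i] - scope[i - 1])  # sprints are numbered from 1
        for i in range(1, len(scope))
        if scope[i] != scope[i - 1]
    ]


done, scope = burn_up_series([10, 12, 8, 15], [100, 100, 110, 110])
for sprint, (d, s) in enumerate(zip(done, scope), start=1):
    print(f"Sprint {sprint}: done {d} / scope {s}")
print(scope_changes(scope))  # → [(3, 10)]
```

The two returned lists can be plotted as lines in any spreadsheet or charting tool; the vertical gap between them is the work remaining.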

New Hampshire - 4000 Footers

This post chronicles my personal journey: a 10-year father-son pursuit of New Hampshire’s 48 peaks over 4,000 ft in the White Mountains. Over nearly 300 miles, through all seasons and conditions, my son grew from an adventuresome 7-year-old into a confident, self-aware young man.

Curious about the NH48? Explore the practical trail advice, and get in touch about your own adventure!